Yesterday’s post contained lots of details about Apple II copy-protection and the minutiae of 5.25-inch floppy disk data recording. It mentioned that bits are recorded on the disk at a rate of 4 µs per bit. This is well-known, and 4 µs/bit appears all over the web in every discussion of Apple II disks. This number underpins everything the Floppy Emu does involving disk emulation, and has been part of its design since the beginning. But as I learned today, it’s wrong.

Sure, the rate is close to 4 µs per bit, close enough that disk emulation still works fine. But it’s not exactly right. The exact number is 4 clock cycles of the Apple II’s 6502 CPU per bit. With a CPU speed of 1.023 MHz, that works out to 3.91 µs per bit. That’s only a two percent difference compared with 4 µs, but it explains some of the behavior I was seeing while examining copy-protected Apple II games.

With the disk spinning at 300 RPM, it’s making one rotation every 200 milliseconds. 4 µs per bit would result in 50000 bits per track, assuming a disk is written using normal hardware with a correctly calibrated disk drive. 50000 is also the number of bits per track given in the well-known book Beneath Apple DOS. But it’s wrong. At 3.91 µs per bit, standard hardware will write 51150 bits per track.
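The arithmetic above is easy to check with a few lines of Python. This is just a back-of-the-envelope sketch using the figures quoted in this post (1.023 MHz, 4 cycles per bit, 300 RPM); the function names are made up for illustration.

```python
# Back-of-the-envelope check of the bit timing and track capacity figures.
CPU_HZ = 1.023e6      # advertised Apple II CPU speed, as quoted above
CYCLES_PER_BIT = 4    # one bit cell lasts 4 CPU clock cycles
RPM = 300             # nominal drive speed

def bit_time_us(cpu_hz: float = CPU_HZ) -> float:
    """Duration of one bit cell in microseconds."""
    return CYCLES_PER_BIT / cpu_hz * 1e6

def bits_per_track(bit_us: float, rpm: float = RPM) -> float:
    """Number of bit cells that fit in one disk rotation."""
    rotation_us = 60.0 / rpm * 1e6   # 200,000 µs at 300 RPM
    return rotation_us / bit_us

print(f"{bit_time_us():.2f} µs per bit")        # 3.91 µs per bit
print(round(bits_per_track(4.0)))               # 50000
print(round(bits_per_track(bit_time_us())))     # 51150
```

A real drive's rotation speed varies a little, so the bits-per-track numbers are nominal values rather than anything a particular disk is guaranteed to contain.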

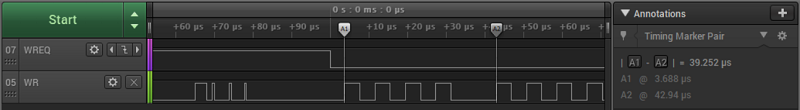

Not content to trust any references at this point, I measured the number directly using a logic analyzer and a real Apple IIe and Apple IIgs. When writing to the disk, both systems used a rate of about 3.92 µs per bit. Here’s a screen capture from a test run with the Apple IIe, showing the time for 10 consecutive bits at 39.252 µs. There was some jitter of about 50 nanoseconds in the measurements, and measuring longer spans of bits revealed an average bit time of about 3.9205 µs. That’s a tiny difference versus 3.91 µs. Can I say it’s close enough, and let it go? Of course not.

The Apple II’s CPU clock is actually precisely 4/14ths the speed of the NTSC standard color-burst frequency of 3.579545 MHz. (This number can be derived as 30 frames/sec times 525 lines/frame times 455/2 cycles/line divided by a correction factor of 1.001.) 3.579545 MHz times 4 divided by 14 is 1.02272714 MHz. Rounded to three decimal places that gives the advertised CPU speed of 1.023 MHz. But using the exact CPU frequency, four clock cycles should be 3.91111 µs. I can’t explain the remaining discrepancy of roughly 0.01 µs compared with my measurements, but I’ll chalk it up to measurement error.
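Here is the same derivation as a short Python sketch, using exact fractions so no rounding sneaks in along the way. The variable names are my own; the factors are the ones listed in the paragraph above.

```python
from fractions import Fraction

# NTSC color-burst frequency in Hz, built from the factors listed above:
# 30 frames/sec * 525 lines/frame * 455/2 cycles/line / 1.001
color_burst_hz = Fraction(30) * 525 * Fraction(455, 2) / Fraction(1001, 1000)

# The Apple II CPU clock is 4/14 of the color-burst frequency.
cpu_hz = color_burst_hz * Fraction(4, 14)

# Time for four CPU clock cycles (one bit cell), in microseconds.
bit_time_us = 4 / cpu_hz * 1_000_000

print(f"{float(color_burst_hz) / 1e6:.6f} MHz")  # 3.579545 MHz
print(f"{float(cpu_hz) / 1e6:.6f} MHz")          # 1.022727 MHz
print(f"{float(bit_time_us):.5f} µs")            # 3.91111 µs
```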

But wait! In the comments to the answer to this Stack Exchange question, it’s mentioned that every 65th clock cycle of the Apple II is 1/7th longer than the others, because of weird reasons. That means the average clock cycle is longer than I calculated by a factor of 1 + 1/(65*7), or 456/455, and the effective CPU speed is slower by the same factor. In light of this, I calculate a new average CPU speed of about 1.020484 MHz, and a time for four clock cycles of about 3.9197 µs. That’s a difference of only 0.0008 µs from my measurement, less than one nanosecond. Ah ha! So everything makes sense, and my measurements were correct.
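One way to model the stretched cycle is to average the cycle length over a group of 65 cycles and scale the clock accordingly. The sketch below does that, assuming the 1/7 stretch applies to the cycle's duration, and starting from the 3.579545 MHz color-burst figure and my measured 3.9205 µs average. Variable names are illustrative only.

```python
from fractions import Fraction

# Unstretched CPU clock in Hz: color burst (3.579545 MHz) times 4/14.
BASE_CPU_HZ = Fraction(3_579_545) * 4 / 14

# In every group of 65 cycles, 64 are normal length and one is 1/7 longer.
# Total duration of the group, measured in normal-cycle lengths:
periods_per_group = 64 + Fraction(8, 7)

# Average cycle length relative to a normal cycle.
stretch = periods_per_group / 65

# A longer average cycle means a proportionally lower effective frequency.
effective_hz = BASE_CPU_HZ / stretch

# Four effective clock cycles per bit, in microseconds.
bit_time_us = float(4 / effective_hz * 1_000_000)

measured_us = 3.9205  # average bit time from the logic analyzer

print(f"{bit_time_us:.4f} µs per bit")                           # 3.9197 µs per bit
print(f"{abs(measured_us - bit_time_us) * 1000:.1f} ns off")     # 0.8 ns off
```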

The difference between 4 µs per bit and 3.92 may seem like a minor detail, but for a floppy disk emulator developer, it’s like suddenly discovering that the value of pi is not 3.14159 but 3.2. My mind is blown.